How can I ingest AWS EKS logs to only one log source in Panther?

QUESTION

I would like to ingest AWS EKS logs into Panther using only one log source. I don't want to follow the steps in the relevant documentation and end up with multiple log sources, each with its own Firehose stream, S3 bucket, and IAM role. How can I use Firehose to deliver the EKS logs in CloudWatch from multiple clusters into a single S3 bucket, so that I can use prefixes in a Panther S3 log source configuration to differentiate the logs instead?

ANSWER

This configuration is possible, but it requires some manual work and customization of the Terraform or CloudFormation template we provide. EKS can send its logs to CloudWatch natively, and once the logs are in CloudWatch, Panther can ingest them through a CloudWatch log source. However, each CloudWatch log source ingests the logs from a single log group.
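For reference, turning on EKS control plane logging to CloudWatch is a single EKS API call per cluster. The minimal sketch below builds the `logging` argument for boto3's `eks.update_cluster_config`; the cluster names are hypothetical, and the client can be injected so the request can be inspected without AWS credentials (boto3 is only imported when no client is passed).

```python
from typing import Iterable, Optional

# The EKS control plane log types that can be delivered to CloudWatch Logs.
CONTROL_PLANE_LOG_TYPES = ["api", "audit", "authenticator", "controllerManager", "scheduler"]


def logging_config(types: Optional[Iterable[str]] = None) -> dict:
    """Build the `logging` argument for eks.update_cluster_config."""
    selected = list(types) if types is not None else list(CONTROL_PLANE_LOG_TYPES)
    return {"clusterLogging": [{"types": selected, "enabled": True}]}


def enable_control_plane_logging(
    cluster_name: str, types: Optional[Iterable[str]] = None, client=None
) -> dict:
    """Enable the given control plane log types on one cluster.

    Without an injected client this needs boto3 and AWS credentials.
    """
    if client is None:
        import boto3  # only required when talking to real AWS

        client = boto3.client("eks")
    return client.update_cluster_config(name=cluster_name, logging=logging_config(types))


# Usage (hypothetical cluster names):
# for name in ["prod-cluster", "staging-cluster"]:
#     enable_control_plane_logging(name)
```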

The AWS documentation for EKS logging mentions the following:

> Choose the cluster that you want to view logs for. The log group name format is `/aws/eks/my-cluster/cluster`.

This means that each EKS cluster's logs go to a separate log group.

By default, Panther's CloudWatch log source setup creates a separate log source, S3 bucket, and Kinesis Data Firehose delivery stream for each log group. If that's undesirable, you can customize the setup: keep one Firehose delivery stream per log group, but point all of those delivery streams at the same S3 bucket, each with a different S3 prefix (for example, one prefix per cluster).
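The customization amounts to one Firehose delivery stream plus one CloudWatch Logs subscription filter per cluster log group, all writing to one bucket. The sketch below is not Panther's Terraform or CloudFormation template; it only illustrates the equivalent boto3 request shapes for `firehose.create_delivery_stream` and `logs.put_subscription_filter`. The bucket, IAM role ARNs, region, account ID, the `eks/<cluster>/` prefix layout, and the stream naming scheme are all hypothetical placeholders, and the two IAM roles (Firehose writing to S3, CloudWatch Logs writing to Firehose) are assumed to exist already.

```python
import re
from typing import Dict, List

# Hypothetical placeholders: replace with your own bucket, roles, region and account.
REGION = "us-east-1"
ACCOUNT_ID = "123456789012"
BUCKET_ARN = "arn:aws:s3:::example-eks-logs"
FIREHOSE_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/example-firehose-to-s3"
CWL_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/example-cwl-to-firehose"


def log_group_for_cluster(cluster: str) -> str:
    # EKS writes control plane logs to /aws/eks/<cluster-name>/cluster.
    return f"/aws/eks/{cluster}/cluster"


def stream_name_for_cluster(cluster: str) -> str:
    # Firehose stream names allow at most 64 characters from [A-Za-z0-9_.-].
    return re.sub(r"[^A-Za-z0-9_.-]", "-", f"eks-{cluster}")[:64]


def plan_for_cluster(cluster: str) -> Dict[str, dict]:
    stream = stream_name_for_cluster(cluster)
    return {
        "firehose": {
            "DeliveryStreamName": stream,
            "DeliveryStreamType": "DirectPut",  # CloudWatch Logs pushes records directly
            "ExtendedS3DestinationConfiguration": {
                "RoleARN": FIREHOSE_ROLE_ARN,
                "BucketARN": BUCKET_ARN,  # same bucket for every cluster
                "Prefix": f"eks/{cluster}/",  # only the prefix differs per cluster
                "ErrorOutputPrefix": f"eks-errors/{cluster}/",
                # CloudWatch Logs already gzips what it sends, so don't compress again.
                "CompressionFormat": "UNCOMPRESSED",
            },
        },
        "subscription": {
            "logGroupName": log_group_for_cluster(cluster),
            "filterName": f"to-{stream}",
            "filterPattern": "",  # an empty pattern forwards every log event
            "destinationArn": f"arn:aws:firehose:{REGION}:{ACCOUNT_ID}:deliverystream/{stream}",
            "roleArn": CWL_ROLE_ARN,
        },
    }


def apply(plans: List[Dict[str, dict]], firehose=None, logs=None) -> None:
    """Create the resources; needs boto3 and AWS credentials unless clients are injected."""
    if firehose is None or logs is None:
        import boto3

        firehose = firehose or boto3.client("firehose", region_name=REGION)
        logs = logs or boto3.client("logs", region_name=REGION)
    for plan in plans:
        firehose.create_delivery_stream(**plan["firehose"])
        # A real script should typically wait until describe_delivery_stream reports
        # the stream as ACTIVE before attaching the subscription filter.
        logs.put_subscription_filter(**plan["subscription"])
```

Because every stream shares `BUCKET_ARN` and differs only in `Prefix`, a single S3 log source can later tell the clusters apart by prefix.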

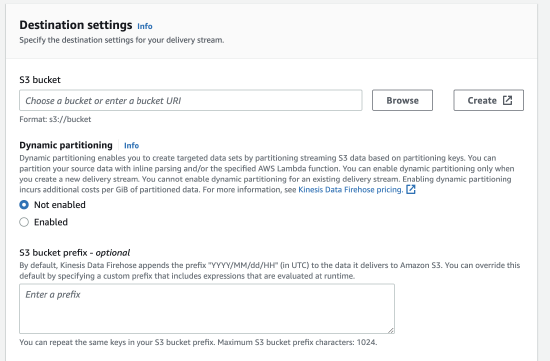

In the screenshot below you can see the setting to control that in the AWS console:

Once the logs are in one S3 bucket, you only need to create a single S3 log source in Panther for that bucket and set its log stream type to CloudWatch Logs. Within that source, you can then use the per-cluster S3 prefixes to differentiate the logs.
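The CloudWatch Logs stream type matters because of how the objects land in S3. Records that a CloudWatch Logs subscription sends through Firehose are gzip-compressed JSON envelopes (with fields such as `messageType`, `logGroup`, and `logEvents`), and Firehose typically concatenates them into one object with no delimiter between documents, so a plain JSON or line-based parser would not read them correctly. The sketch below is only meant to illustrate that format; Panther does this parsing for you. The `eks/<cluster>/` prefix layout in `cluster_from_key` is a hypothetical assumption matching a per-cluster prefix scheme.

```python
import gzip
import json
from typing import Iterator, List, Optional


def iter_cloudwatch_payloads(blob: bytes) -> Iterator[dict]:
    """Yield each CloudWatch Logs subscription envelope from one S3 object.

    Assumes the object is one or more concatenated gzip members whose
    decompressed JSON documents sit back to back with no delimiter.
    """
    text = gzip.decompress(blob).decode("utf-8")  # handles multi-member gzip data
    decoder = json.JSONDecoder()
    pos = 0
    while pos < len(text):
        # raw_decode does not skip leading whitespace, so skip it here.
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos >= len(text):
            break
        doc, pos = decoder.raw_decode(text, pos)
        yield doc


def extract_messages(blob: bytes) -> List[str]:
    """Return the raw log lines, skipping CloudWatch Logs control messages."""
    messages = []
    for payload in iter_cloudwatch_payloads(blob):
        # CONTROL_MESSAGE envelopes are connectivity checks, not log data.
        if payload.get("messageType") != "DATA_MESSAGE":
            continue
        for event in payload.get("logEvents", []):
            messages.append(event["message"])
    return messages


def cluster_from_key(key: str, root: str = "eks/") -> Optional[str]:
    """Map an S3 key like 'eks/<cluster>/2024/01/01/00/...' back to its cluster."""
    if not key.startswith(root):
        return None
    cluster, sep, _ = key[len(root):].partition("/")
    return cluster if sep and cluster else None
```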