AWS Kinesis: Firehose delivery stream combines data into one line in S3. How can Panther ingest the logs?

QUESTION

I have a Kinesis Firehose delivery stream that sends data to an S3 bucket, and I want Panther to ingest the logs from that bucket. The problem is that Firehose writes all of my events on a single line, like this:

`{"customer_id": 1}{"customer_id": 2}`

Can Panther ingest this, given that each event normally needs to be on its own line?

ANSWER

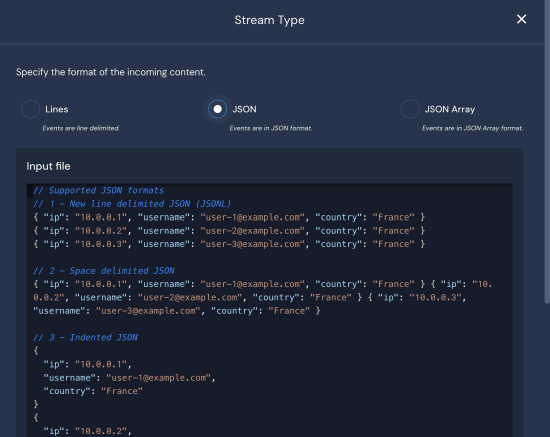

When configuring your S3 log source, select JSON as the stream type instead of Lines. With the JSON stream type, Panther detects where each JSON object ends and splits consecutive objects into separate events, so no newline separator is needed between them.
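To illustrate why the Lines type fails on this data and how a JSON stream parser can still recover each event, here is a minimal Python sketch of the general technique (incrementally decoding concatenated JSON values with `json.JSONDecoder.raw_decode`). It is not Panther's actual implementation; the helper name `iter_json_stream` and the sample string are hypothetical and used only for illustration.

```python
import json
from typing import Any, Iterator


def iter_json_stream(text: str) -> Iterator[Any]:
    """Hypothetical helper: yield each JSON value from concatenated JSON text.

    Whitespace (including newlines) between values is allowed but not required.
    """
    decoder = json.JSONDecoder()
    pos = 0
    length = len(text)
    while True:
        # raw_decode does not skip leading whitespace, so skip it here
        while pos < length and text[pos].isspace():
            pos += 1
        if pos >= length:
            return
        # Parse one complete value starting at pos; returns (value, end_index)
        value, pos = decoder.raw_decode(text, pos)
        yield value


# One S3 object body as Firehose wrote it: no delimiter between records
firehose_object = '{"customer_id": 1}{"customer_id": 2}'

# A line-based parser sees one line and rejects the second object
try:
    json.loads(firehose_object)
except json.JSONDecodeError as err:
    print("Lines-style parse failed:", err.msg)  # → Lines-style parse failed: Extra data

# Stream-style parsing recovers both events
events = list(iter_json_stream(firehose_object))
print(events)  # → [{'customer_id': 1}, {'customer_id': 2}]
```

Because the parser finds where each object ends on its own, the same helper also handles newline-delimited input, which is why the JSON stream type does not require a newline separator.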